共计 21699 个字符,预计需要花费 55 分钟才能阅读完成。

迁移版本

Hadoop 源版本:3.0 Hadoop 目标版本:3.0

Hive 源版本:hive-common-2.1.1 Hive 目标版本:3.1.2

迁移准备

网络打通

通过专线或者 VPN 打通源集群和目标集群的网络,使得源集群中所有服务器可以和目标集群所有服务器通信,方便后续进行数据拷贝。

迁移步骤

1、先迁移 HDFS 文件

通常 HDFS 数据体量较大,迁移时间较长,所以建议先迁移 HDFS 文件

2、再迁移 Hive 元数据

HDFS 文件完成全量迁移后,即可以考虑大数据集群停止新的数据写入。停止数据写入后,即可开始迁移 Hive 元数据以及迁移增量的 HDFS 数据(HDFS 全量迁移期间很可能有新数据写入,业务团队根据数据写入情况评估)

迁移方案

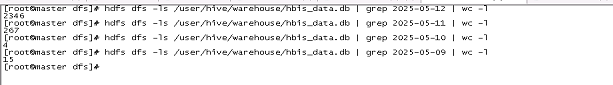

集团二期数据现状

从上图可知,Hive 数据基本为新写入数据,如果按照全量 + 增量的方式迁移,不太合适

- 方式一:停止业务输入,设置快照,将快照数据全量迁移到新集群。

- 方式二:不停业务,允许部分数据差异,指定时间点快照迁移到新集群。

按照模拟测试结果:

- 数据量:57G 迁移速度:121MB/s 耗时:8min

推算:

- 数据量:7 TB(即 7,000 GB)

- 迁移速度:450 MB/s(按照实际结果)

耗时大约:4.5 H

1、迁移 HDFS 数据

(1)迁移工具介绍使用 HDFS DistCp 远程分布式复制数据到目标新集群。DistCp 是一个专门用于集群内部和跨集群服务数据的工具,底层利用 MapReduce 把数据复制拆分为 Map 任务,进行分布式拷贝。

(2)迁移准备

1. 非快照式迁移

停止源和目标 HDFS 相关文件的写入操作使用 DistCp 进行数据复制的前提是:== 源和目标 HDFS 中的文件都不能在写的状态 ==,否则 DistCp 拷贝可能会失败。

2. 快照式迁移

提前对数据目录打好快照,迁移快照即可。

(3)全量迁移

登录 Master 节点执行远程分布式全量数据拷贝

(4)增量迁移

登录 Master 节点,执行远程分布式增量数据拷贝

迁移 Hdfs 数据

增量迁移测试(迁移时停止业务)

(1)准备数据

数据量:50000 条

创建分区建表语句和模拟数据脚本

EATE TABLE user_data_fenqu (

id INT,

name STRING,

province STRING,

city STRING

)

PARTITIONED BY (age INT);模拟数据并导入 hive

#!/bin/bash

# 设置 Hive 执行命令的环境变量(根据实际情况配置)export HIVE_HOME=/path/to/hive

export PATH=$HIVE_HOME/bin:$PATH

# 数据生成函数

generate_data() {

# 生成指定数量的模拟数据

local count=$1

local file_path="/tmp/user_data.csv"

# 清空文件内容

> $file_path

# 生成模拟数据并保存到文件

for ((i=1; i<=count; i++))

do

id=$i

name="用户 $id"

province="省份 $(($i % 5 + 1))"

city="城市 $(($i % 10 + 1))"

age=$((18 + $i % 50)) # 随机生成 18-67 岁之间的年龄

echo "$id, $name, $province, $city, $age" >> $file_path

done

echo "生成了 $count 条模拟数据到 $file_path"

}

# 插入数据函数

insert_data_to_hive() {

local count=$1

local partition_age=$2

# 插入数据到 Hive 表的分区中

hive -e "

use user_info_db;

LOAD DATA LOCAL INPATH '/tmp/user_data.csv' INTO TABLE user_data_fenqu PARTITION (age = $partition_age);

"echo"$count 条数据已成功插入到 Hive 表的年龄 $partition_age 分区中 "

}

# 主脚本流程

read -p "请输入要生成的数据条数:" data_count

read -p "请输入要插入的年龄分区:" partition_age

# 生成数据

generate_data $data_count

# 插入数据到 Hive

insert_data_to_hive $data_count $partition_age

# 清理临时文件

rm /tmp/user_data.csv

echo "脚本执行完毕"执行完毕,数据如下所示

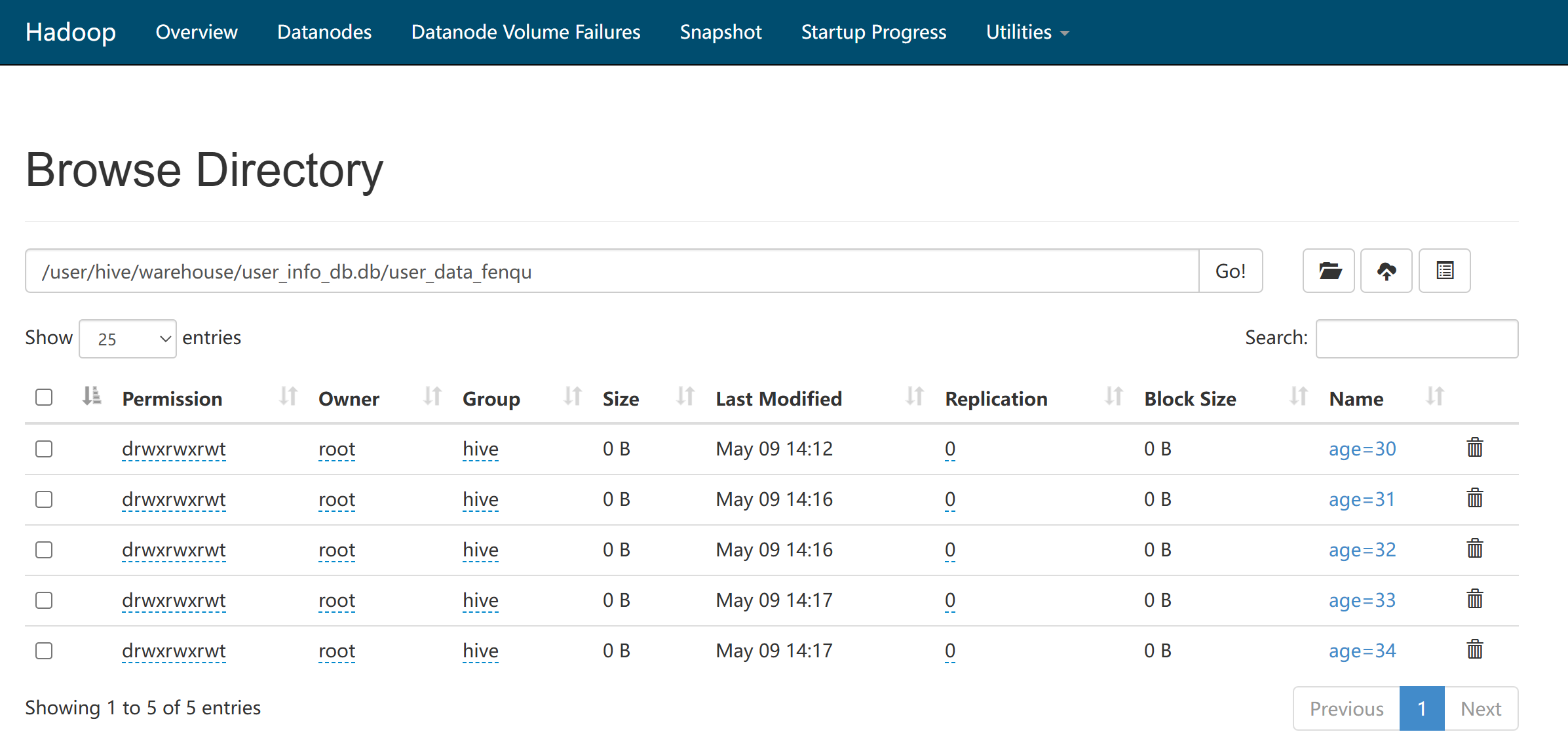

首次使用全量迁移

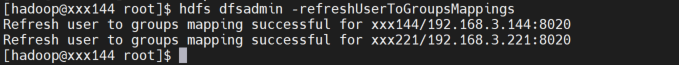

执行前在目前集群设置用户 root 具备 hadoop 执行权限,操作如下(在目标集群 NameNode Active 节点执行)

groupadd supergroup

usermod -a -G supergroup root

su - hadoop -s /bin/bash -c "hdfs dfsadmin -refreshUserToGroupsMappings"

hadoop distcp

-ptug

-overwrite

-skipcrccheck

-strategy dynamic

hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/user_data_fenqu

hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db/上述命令在源集群节点执行,如果没有权限,则按照上面命令添加

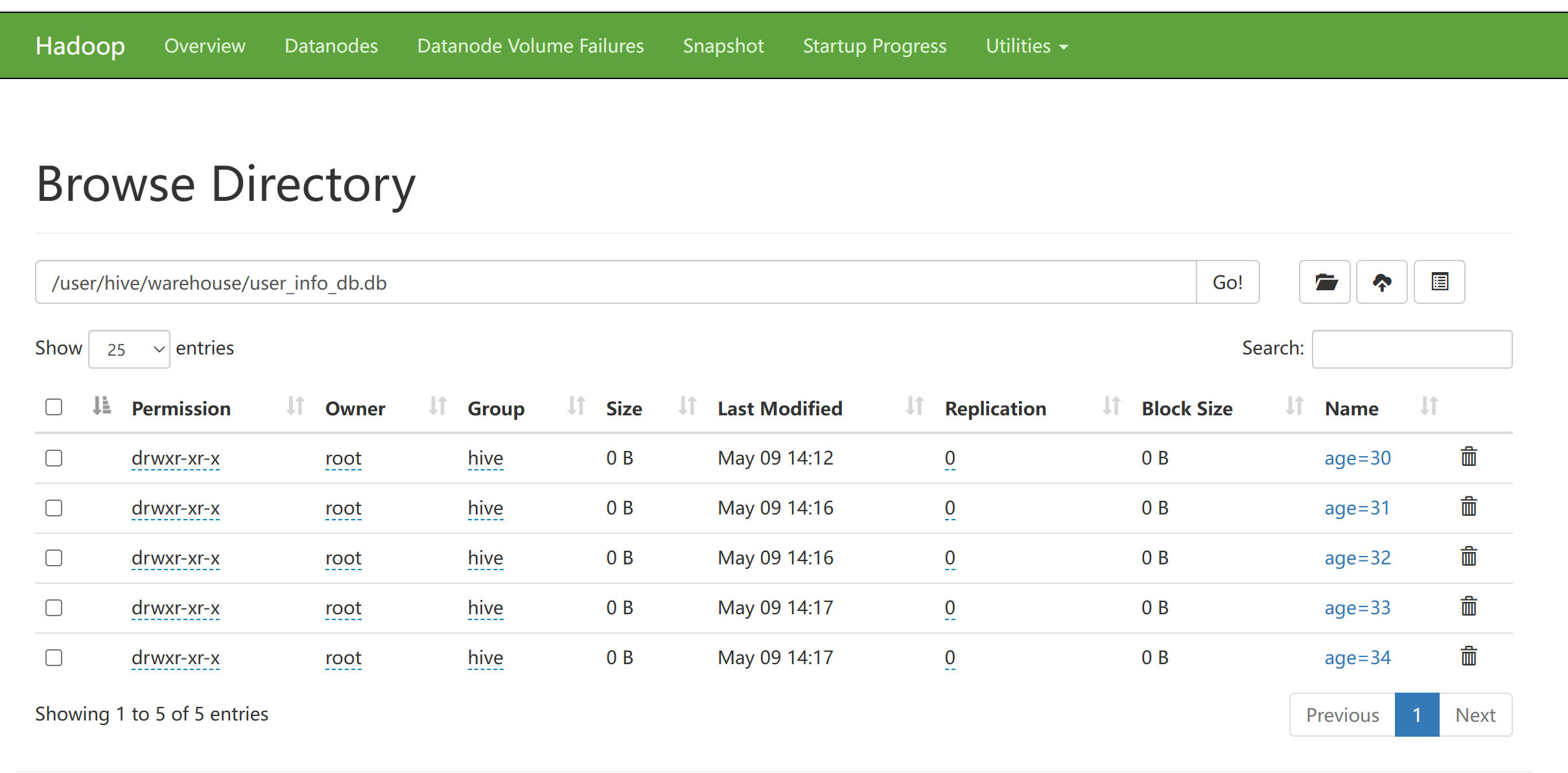

目标集群目录显示如下,其中时间戳与源集群一致

源集群的 user_data_fenqu 添加新数据,再次测试

[root@cdh02 分区]# sh table.sh

请输入要生成的数据条数: 10000

请输入要插入的年龄分区: 35

生成了 10000 条模拟数据到 /tmp/user_data.csv

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.2.1-1.cdh6.2.1.p0.1425774/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.2.1-1.cdh6.2.1.p0.1425774/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/opt/cloudera/parcels/CDH-6.2.1-1.cdh6.2.1.p0.1425774/jars/hive-common-2.1.1-cdh6.2.1.jar!/hive-log4j2.properties Async: false

OK

Time taken: 1.864 seconds

Loading data to table user_info_db.user_data_fenqu partition (age=35)

OK

Time taken: 2.577 seconds

10000 条数据已成功插入到 Hive 表的年龄 35 分区中

脚本执行完毕此时源集群新增了时间戳为 May 09 15:18的数据,之后执行增量迁移

hadoop distcp

-ptug

-update

-skipcrccheck

-strategy dynamic

-delete

hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/user_data_fenqu

hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db/消耗时间为 1 min map tasks=12

总结:当源集群文件大小没发生变化时,--update 只迁移新增加的文件

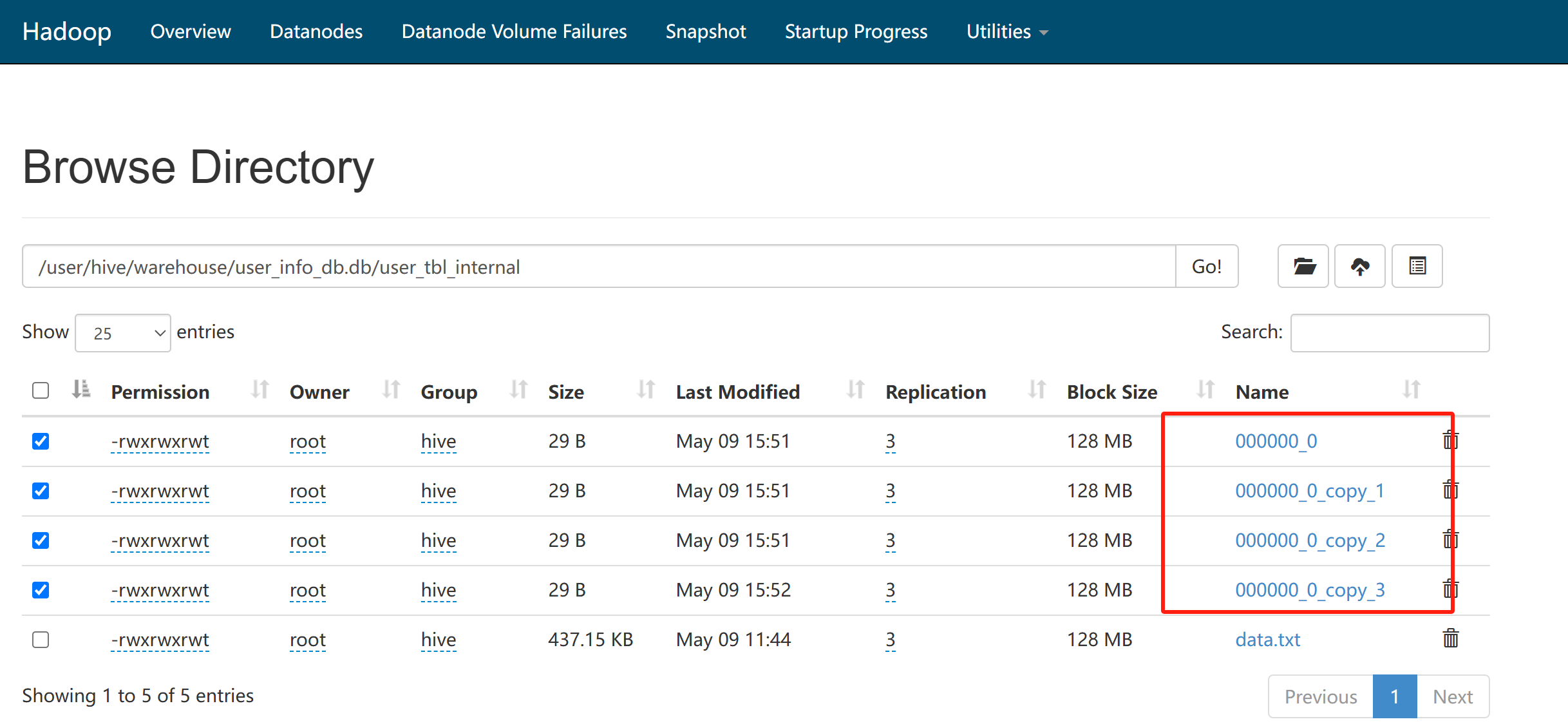

在执行全量迁移到目标集群后,源集群某张表 insert 了部分数据,此时 hdfs 目录的变化

如上图所示,每次执行 insert 的时候,集群会产生一个文件,对于 distcp 来讲,该部分属于新增文件。

写入迁移测试(迁移时运行业务)

首先将源集群的数据库 user_info_db 全量迁移到目标集群, 大约 1G 数据。

[root@cdh02 data]# hdfs dfs -du -h /user/hive/warehouse/user_info_db.db

484.3 M 1.4 G /user/hive/warehouse/user_info_db.db/dest_table

1.5 K 4.5 K /user/hive/warehouse/user_info_db.db/insert_test

484.3 M 1.4 G /user/hive/warehouse/user_info_db.db/source_table

2.6 M 7.8 M /user/hive/warehouse/user_info_db.db/user_data_fenqu

437.2 K 1.3 M /user/hive/warehouse/user_info_db.db/user_tbl_ext

0 0 /user/hive/warehouse/user_info_db.db/user_tbl_ext_parti

437.3 K 1.3 M /user/hive/warehouse/user_info_db.db/user_tbl_internal

[root@cdh02 data]# hadoop distcp -ptug -strategy dynamic hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db

25/05/10 21:57:11 INFO tools.DistCp: Input Options: DistCpOptions{atomicCommit=false, syncFolder=false, deleteMissing=false, ignoreFailures=false, overwrite=false, append=false, useDiff=false, useRdiff=false, fromSnapshot=null, toSnapshot=null, skipCRC=false, blocking=true, numListstatusThreads=0, maxMaps=20, mapBandwidth=0.0, copyStrategy='dynamic', preserveStatus=[USER, GROUP, TIMES], atomicWorkPath=null, logPath=null, sourceFileListing=null, sourcePaths=[hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db], targetPath=hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db, filtersFile='null', blocksPerChunk=0, copyBufferSize=8192, verboseLog=false}, sourcePaths=[hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db], targetPathExists=true, preserveRawXattrsfalse

25/05/10 21:57:11 INFO client.RMProxy: Connecting to ResourceManager at cdh02/192.16

...

/05/10 21:58:06 INFO mapreduce.Job: map 75% reduce 0%

25/05/10 21:58:09 INFO mapreduce.Job: map 80% reduce 0%

25/05/10 21:58:12 INFO mapreduce.Job: map 85% reduce 0%

25/05/10 21:58:15 INFO mapreduce.Job: map 90% reduce 0%

25/05/10 21:58:18 INFO mapreduce.Job: map 95% reduce 0%

25/05/10 21:58:22 INFO mapreduce.Job: map 100% reduce 0%

25/05/10 21:58:23 INFO mapreduce.Job: Job job_1743415319917_0054 completed successfully结束后,在源集群执行数据写入操作

hive> insert overwrite table dest_table select * from source_table;在写入时开启 dist 同步

[root@cdh02 ~]# hadoop distcp -ptug -strategy dynamic --update hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db

25/05/10 22:03:28 INFO tools.DistCp: Input Options: DistCpOptions{atomicCommit=false, syncFolder=false, deleteMissing=false, ignoreFailures=false, overwrite=false, append=false, useDiff=false, useRdiff=false, fromSnapshot=null, toSnapshot=null, skipCRC=false, blocking=true, numListstatusThreads=0, maxMaps=20, mapBandwidth=0.0, copyStrategy='dynamic', preserveStatus=[USER, GROUP, TIMES], atomicWorkPath=null, logPath=null, sourceFileListing=null, sourcePaths=[hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db], targetPath=hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db, filtersFile='null', blocksPerChunk=0, copyBufferSize=8192, verboseLog=false}, sourcePaths=[hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db], targetPathExists=true, preserveRawXattrsfalse

25/05/10 22:03:28 INFO client.RMProxy: Connecting to ResourceManager at cdh02/192.168.2.149:8032

25/05/10 22:03:28 INFO tools.SimpleCopyListing: Paths (files+dirs) cnt = 36; dirCnt = 18

25/05/10 22:03:28 INFO tools.SimpleCopyListing: Build file listing completed.

25/05/10 22:03:28 INFO Configuration.deprecation: io.sort.mb is deprecated. Instead, use mapreduce.task.io.sort.mb

25/05/10 22:03:28 INFO Configuration.deprecation: io.sort.factor is deprecated. Instead, use mapreduce.task.io.sort.factor

25/05/10 22:03:28 INFO tools.DistCp: Number of paths in the copy list: 36

25/05/10 22:03:28 INFO tools.DistCp: Number of paths in the copy list: 36

25/05/10 22:03:28 INFO client.RMProxy: Connecting to ResourceManager at cdh02/192.168.2.149:8032

25/05/10 22:03:29 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /user/root/.staging/job_1743415319917_0056

25/05/10 22:03:29 INFO lib.DynamicInputFormat: DynamicInputFormat: Getting splits for job:job_1743415319917_0056

25/05/10 22:03:30 INFO lib.DynamicInputFormat: Number of dynamic-chunk-files created: 36

25/05/10 22:03:30 INFO mapreduce.JobSubmitter: number of splits:20

25/05/10 22:03:30 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

25/05/10 22:03:30 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1743415319917_0056

25/05/10 22:03:30 INFO mapreduce.JobSubmitter: Executing with tokens: []

25/05/10 22:03:30 INFO conf.Configuration: resource-types.xml not found

25/05/10 22:03:30 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

25/05/10 22:03:30 INFO impl.YarnClientImpl: Submitted application application_1743415319917_0056

25/05/10 22:03:30 INFO mapreduce.Job: The url to track the job: http://cdh02:8088/proxy/application_1743415319917_0056/

25/05/10 22:03:30 INFO tools.DistCp: DistCp job-id: job_1743415319917_0056

25/05/10 22:03:30 INFO mapreduce.Job: Running job: job_1743415319917_0056

25/05/10 22:04:01 INFO mapreduce.Job: Job job_1743415319917_0056 running in uber mode : false

25/05/10 22:04:01 INFO mapreduce.Job: map 0% reduce 0%

25/05/10 22:04:05 INFO mapreduce.Job: Task Id : attempt_1743415319917_0056_m_000000_0, Status : FAILED

Error: java.io.IOException: org.apache.hadoop.tools.mapred.RetriableFileCopyCommand$CopyReadException: java.io.FileNotFoundException: File does not exist: hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/dest_table/.hive-staging_hive_2025-05-10_22-03-13_667_3209766124507811984-1/_tmp.-ext-10002

at org.apache.hadoop.tools.mapred.CopyMapper.map(CopyMapper.java:170)

at org.apache.hadoop.tools.mapred.CopyMapper.map(CopyMapper.java:48)任务由于源集群存在写入数据导致失败。

结论:写入时迁移会导致失败

快照迁移测试(迁移时运行业务)

解决源集群业务写入迁移时报错

全量备份前,对数据库做全量快照, 首先允许这个文件路径可以创建 snapshots

hdfs dfsadmin -allowSnapshot /user/hive/warehouse/user_info_db.db创建快照

hdfs dfs -createSnapshot /user/hive/warehouse/user_info_db.db snapshots_full执行迁移

hadoop distcp -ptug --update -skipcrccheck -strategy dynamic hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/.snapshot/snapshot_full/ hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db/注意:只将快照数据迁移到目标集群的数据库下

迁移过程中未出现报错,之后模拟源集群写入数据,迁移增量数据

hive> insert overwrite table dest_table select * from source_table;执行增量快照

hdfs dfs -createSnapshot /user/hive/warehouse/user_info_db.db snapshots_full_1迁移增量数据

hadoop distcp -ptug --update -skipcrccheck -strategy dynamic hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/.snapshot/snapshot_full_1/ hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db/日志显示如下

File Input Format Counters

Bytes Read=9441

File Output Format Counters

Bytes Written=1900

DistCp Counters

Bandwidth in Btyes=169259216

Bytes Copied=507777650

Bytes Expected=507777650

Bytes Skipped=511396113

Files Copied=2 # 增量文件数量

DIR_COPY=13

Files Skipped=15日志中得知只迁移了新增的文件,代表增量迁移成功。

快照方式可以避免写入时报错,但是适合整个库迁移。

参数调优

-Dmapreduce.task.timeout=1800000

-Ddistcp.dynamic.max.chunks.tolerable=30000迁移速度测试

模拟数据 57G,测试迁移速度

[root@cdh02 ~]# date && hadoop distcp -ptug -m 4 --update -skipcrccheck -strategy dynamic hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/.snapshot/snapshots_full_test/ hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db/ && date

Tue May 13 10:50:13 CST 2025

25/05/13 10:50:15 INFO tools.DistCp: Input Options: DistCpOptions{atomicCommit=false, syncFolder=true, deleteMissing=false, ignoreFailures=false, overwrite=false, append=false, useDiff=false, useRdiff=false, fromSnapshot=null, toSnapshot=null, skipCRC=true, blocking=true, numListstatusThreads=0, maxMaps=4, mapBandwidth=0.0, copyStrategy='dynamic', preserveStatus=[USER, GROUP, TIMES], atomicWorkPath=null, logPath=null, sourceFileListing=null, sourcePaths=[hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/.snapshot/snapshots_full_test], targetPath=hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db, filtersFile='null', blocksPerChunk=0, copyBufferSize=8192, verboseLog=false}, sourcePaths=[hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/.snapshot/snapshots_full_test], targetPathExists=true, preserveRawXattrsfalse

25/05/13 10:50:15 INFO client.RMProxy: Connecting to ResourceManager at cdh02/192.168.2.149:8032

25/05/13 10:50:15 INFO tools.SimpleCopyListing: Paths (files+dirs) cnt = 44; dirCnt = 20

25/05/13 10:50:15 INFO tools.SimpleCopyListing: Build file listing completed.

25/05/13 10:50:15 INFO Configuration.deprecation: io.sort.mb is deprecated. Instead, use mapreduce.task.io.sort.mb

25/05/13 10:50:15 INFO Configuration.deprecation: io.sort.factor is deprecated. Instead, use mapreduce.task.io.sort.factor

25/05/13 10:50:15 INFO tools.DistCp: Number of paths in the copy list: 44

25/05/13 10:50:15 INFO tools.DistCp: Number of paths in the copy list: 44

25/05/13 10:50:15 INFO client.RMProxy: Connecting to ResourceManager at cdh02/192.168.2.149:8032

25/05/13 10:50:15 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /user/root/.staging/job_1747103211192_0004

25/05/13 10:50:15 INFO lib.DynamicInputFormat: DynamicInputFormat: Getting splits for job:job_1747103211192_0004

25/05/13 10:50:16 INFO lib.DynamicInputFormat: Number of dynamic-chunk-files created: 8

25/05/13 10:50:16 INFO mapreduce.JobSubmitter: number of splits:4

25/05/13 10:50:16 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

25/05/13 10:50:16 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1747103211192_0004

25/05/13 10:50:16 INFO mapreduce.JobSubmitter: Executing with tokens: []

25/05/13 10:50:16 INFO conf.Configuration: resource-types.xml not found

25/05/13 10:50:16 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

25/05/13 10:50:16 INFO impl.YarnClientImpl: Submitted application application_1747103211192_0004

25/05/13 10:50:16 INFO mapreduce.Job: The url to track the job: http://cdh02:8088/proxy/application_1747103211192_0004/

25/05/13 10:50:16 INFO tools.DistCp: DistCp job-id: job_1747103211192_0004

25/05/13 10:50:16 INFO mapreduce.Job: Running job: job_1747103211192_0004

25/05/13 10:50:22 INFO mapreduce.Job: Job job_1747103211192_0004 running in uber mode : false

25/05/13 10:50:22 INFO mapreduce.Job: map 0% reduce 0%

25/05/13 10:50:41 INFO mapreduce.Job: map 25% reduce 0%

25/05/13 10:52:30 INFO mapreduce.Job: map 50% reduce 0%

25/05/13 10:55:01 INFO mapreduce.Job: map 51% reduce 0%

25/05/13 10:56:26 INFO mapreduce.Job: map 75% reduce 0%

25/05/13 10:58:13 INFO mapreduce.Job: map 100% reduce 0%

25/05/13 10:58:13 INFO mapreduce.Job: Job job_1747103211192_0004 completed successfully

25/05/13 10:58:13 INFO mapreduce.Job: Counters: 40

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=895540

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=43204326515

HDFS: Number of bytes written=43204316672

HDFS: Number of read operations=250

HDFS: Number of large read operations=0

HDFS: Number of write operations=123

HDFS: Number of bytes read erasure-coded=0

Job Counters

Launched map tasks=4

Other local map tasks=4

Total time spent by all maps in occupied slots (ms)=464448

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=464448

Total vcore-milliseconds taken by all map tasks=464448

Total megabyte-milliseconds taken by all map tasks=475594752

Map-Reduce Framework

Map input records=44

Map output records=10

Input split bytes=540

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=2062

CPU time spent (ms)=151030

Physical memory (bytes) snapshot=1068793856

Virtual memory (bytes) snapshot=10533302272

Total committed heap usage (bytes)=846725120

Peak Map Physical memory (bytes)=409997312

Peak Map Virtual memory (bytes)=2637160448

File Input Format Counters

Bytes Read=10624

File Output Format Counters

Bytes Written=1321

DistCp Counters

Bandwidth in Btyes=360237234

Bytes Copied=43204315351

Bytes Expected=43204315351

Bytes Skipped=19239995854

Files Copied=14

DIR_COPY=20

Files Skipped=10

Tue May 13 10:58:13 CST 2025耗时:8min

速度:121MB/s

数据一致性校验

校验 HDFS 数据目录文件数量是否一致

[root@cdh01 ~]# hadoop fs -count -v -x /user/hive/warehouse/user_info_db.db

DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME

14 17 1019173763 /user/hive/warehouse/user_info_db.db上述显示源集群数据:目录数、文件数、字节数

目前集群执行结果

[root@xxx235 mysql]# hadoop fs -count -v -x /user/hive/warehouse/user_info_db.db

DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME

14 17 1019173763 /user/hive/warehouse/user_info_db.db迁移 Hive 元数据

导出 hive 元数据

[root@cdh01 bin]# ./mysqldump -hlocalhost -uroot -p --databases metastore > metastore.sql修改元数据集群信息

方式 1:

采用 sed 修改 metastore.sql 中的表 sds、dbs的 location 信息

方式二:

导入新集群后,执行 update 语句

UPDATE sds

SET location = REPLACE(location, 'master:8020', 'cluster:8020')

WHERE location LIKE '%master:8020%';

UPDATE dbs

SET db_location_url = REPLACE(db_location_url, 'master:8020', 'cluster:8020')

WHERE db_location_url LIKE '%master:8020%';导入新集群的数据库

create database hive_metastore;

use hive_metastore;

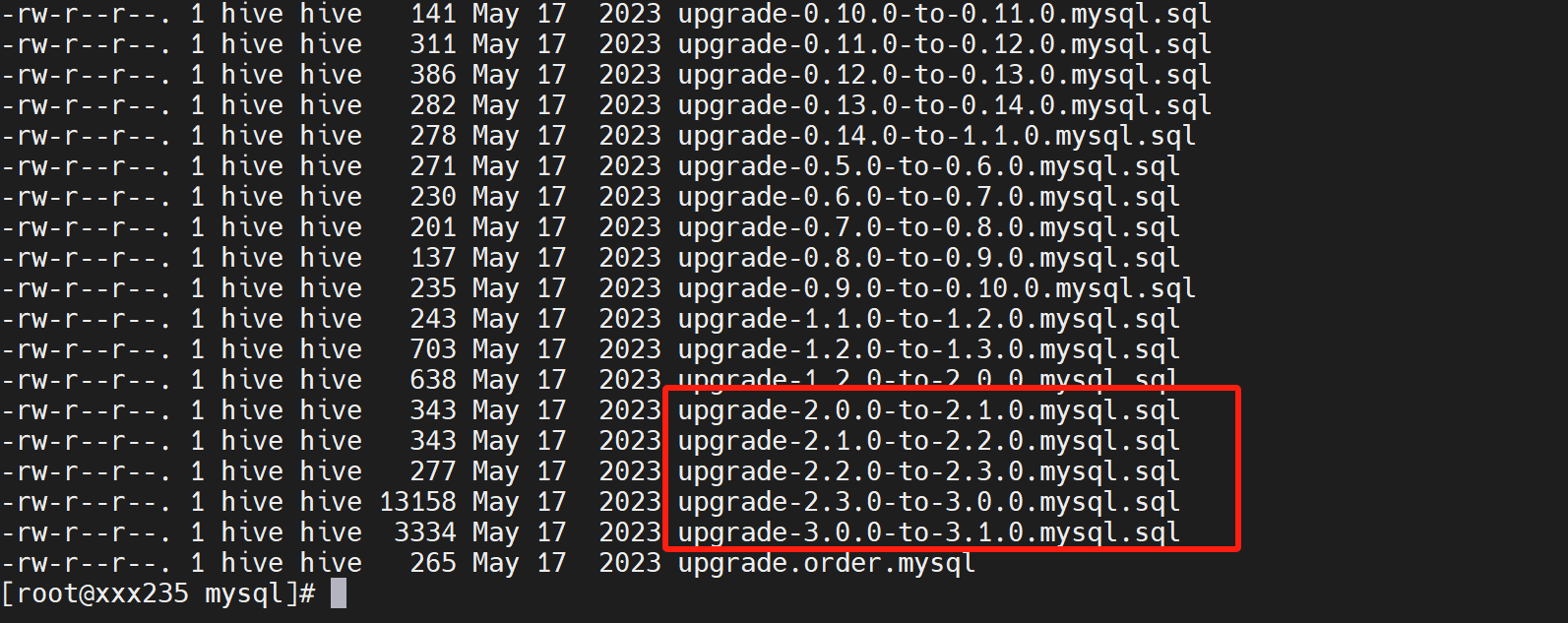

source metastore.sql;采用 hive3.1 自带升级脚本, 可以把 /srv/dstore/1.0.0.0/hive/scripts/metastore/upgrade/mysql 目录 copy 到 mysql 部署节点

登录 mysql,按照版本逐级执行

注意:执行过程中由于官方提供 sql 注释格式错误,会报关于注释的错误,无须处理。

use hive_metastore;

sourc upgrade-2.0.0-2.1.0.mysql.sql;

...

source upgrade-3.0.0-3.1.0.mysql.sql;执行后,客户端登录 hive,执行查询测试

select count(*) from xxx;迁移脚本

对于数据量比较大的场景,可以细化到表去迁移

首先通过查询语句导出指定数据库的所有表名称

hive -e "use user_info_db;show tables" | grep -v SLF4J > all_table.txt查看结果

[root@cdh01 ~]# cat all_table.txt

dest_table

insert_test

source_table

user_data_fenqu

user_tbl_ext

user_tbl_ext_parti

user_tbl_internal编写 shell 迁移脚本

#!/bin/bash

# Hive 集群数据迁移脚本

# 用法:# 全量迁移:./migrate_hive.sh full

# 增量迁移:./migrate_hive.sh incremental

# 从指定表开始迁移:./migrate_hive.sh full|incremental 表名

# 配置文件

TABLE_LIST_FILE="table.txt" # 包含所有表名的文件

MIGRATED_LIST_FILE="migrated_tables.txt" # 已迁移表的记录

SUCCESS_FILE="migration_success.txt" # 迁移成功的表记录

FAILURE_FILE="migration_failure.txt" # 迁移失败的表记录

PROGRESS_FILE="migration_progress.txt" # 迁移进度记录

SOURCE_NN="192.168.2.149:8020" # 源 HDFS NameNode 地址

DEST_NN="192.168.3.144:8020" # 目标 HDFS NameNode 地址

DATABASE=user_info_db.db # 迁移数据库

SOURCE_PATH="/user/hive/warehouse/${DATABASE}" # 源 HDFS 路径

DEST_PATH="/user/hive/warehouse/${DATABASE}_1" # 目标 HDFS 路径

LOG_DIR="/hive_move/logs" # 日志目录

MAPPERS=80 # distcp 使用的 mapper 数量

Bandwidth="100" # 设置带宽

# 确保日志目录存在

mkdir -p "$LOG_DIR"

# 确保记录文件存在

touch "$MIGRATED_LIST_FILE" "$SUCCESS_FILE" "$FAILURE_FILE" "$PROGRESS_FILE"

# 获取迁移模式(全量或增量)MIGRATION_MODE="$1"

if [-z "$MIGRATION_MODE"]; then

echo "错误: 请提供迁移模式: full(全量) 或 incremental(增量)"

echo "用法: $0 [full|incremental] [起始表名(可选)]"

exit 1

fi

# 检查 table.txt 文件是否存在

if [! -f "$TABLE_LIST_FILE"]; then

echo "错误: 找不到表列表文件: $TABLE_LIST_FILE"

exit 1

fi

# 检查是否提供了起始表名

START_TABLE="$2"

START_MIGRATION=false

if [-z "$START_TABLE"]; then

# 没有提供起始表,从头开始

START_MIGRATION=true

echo "将从第一个表开始迁移"

else

echo "将从表 $START_TABLE 开始迁移"

# 检查起始表是否存在于表列表中

if ! grep -q "^$START_TABLE$" "$TABLE_LIST_FILE"; then

echo "错误: 表 $START_TABLE 不在表列表文件中"

exit 1

fi

fi

# 获取待迁移的表列表

if ["$MIGRATION_MODE" == "full"]; then

echo "执行全量迁移模式"

# 全量迁移: 迁移表列表文件中的所有表

TABLES=$(cat "$TABLE_LIST_FILE")

elif ["$MIGRATION_MODE" == "incremental"]; then

echo "执行增量迁移模式"

# 增量迁移: 只迁移尚未成功迁移的表

TABLES=$(comm -23 <(sort "$TABLE_LIST_FILE") <(sort "$SUCCESS_FILE"))

else

echo "错误: 无效的迁移模式,请使用'full'或'incremental'"

exit 1

fi

if [-z "$TABLES"]; then

echo "没有表需要迁移"

exit 0

fi

# 设置陷阱,确保在脚本被中断时记录当前进度

trap 'echo" 脚本被中断,最后处理的表记录在 $PROGRESS_FILE"; exit 1' INT TERM

echo "准备迁移的表数量: $(echo"$TABLES"| wc -l)"

echo "-----------------------------------"

# 遍历每个表进行迁移

for TABLE in $TABLES; do

# 如果指定了起始表,检查是否已到达起始表

if ["$START_MIGRATION" == "false"]; then

if ["$TABLE" == "$START_TABLE"]; then

START_MIGRATION=true

else

continue

fi

fi

# 记录当前处理的表

echo "$TABLE" > "$PROGRESS_FILE"

# 检查当前表是否已经迁移成功,如果是则跳过

if grep -q "^$TABLE$" "$SUCCESS_FILE"; then

echo "表 $TABLE 已成功迁移,跳过..."

continue

fi

echo "$(date'+%Y-%m-%d %H:%M:%S') - 开始迁移表: $TABLE"

# 为每个表创建单独的日志文件

TABLE_LOG="$LOG_DIR/${TABLE}_$(date'+%Y%m%d%H%M%S').log"

# 使用 hadoop distcp 命令进行迁移

echo "执行命令: hadoop distcp -m $MAPPERS -bandwidth ${Bandwidth} -pugp -strategy dynamic -skipcrccheck -delete -update hdfs://$SOURCE_NN$SOURCE_PATH/$TABLE hdfs://$DEST_NN$DEST_PATH/$TABLE"

# 执行迁移命令并记录日志

hadoop distcp -m $MAPPERS -bandwidth ${Bandwidth} -ptug -strategy dynamic -skipcrccheck -delete -update

hdfs://$SOURCE_NN$SOURCE_PATH/$TABLE hdfs://$DEST_NN$DEST_PATH/$TABLE > "$TABLE_LOG" 2>&1

MIGRATION_RESULT=$?

if [$MIGRATION_RESULT -eq 0]; then

echo "$(date'+%Y-%m-%d %H:%M:%S') - 迁移成功: $TABLE"

echo "$TABLE" >> "$SUCCESS_FILE"

echo "$TABLE" >> "$MIGRATED_LIST_FILE"

else

echo "$(date'+%Y-%m-%d %H:%M:%S') - 迁移失败: $TABLE,错误码: $MIGRATION_RESULT"

echo "详细日志请查看: $TABLE_LOG"

echo "$TABLE" >> "$FAILURE_FILE"

# 可选: 在失败时退出脚本

echo "由于表 $TABLE 迁移失败,脚本终止。可以通过以下命令从失败的表继续执行:"

echo "$0 $MIGRATION_MODE $TABLE"

exit 1

fi

echo "-----------------------------------"

done

# 清除进度文件

rm -f "$PROGRESS_FILE"

echo "迁移任务完成!"

echo "成功迁移的表记录在: $SUCCESS_FILE"

echo "失败迁移的表记录在: $FAILURE_FILE"

# 显示统计信息

TOTAL_TABLES=$(cat "$TABLE_LIST_FILE" | wc -l)

SUCCESS_COUNT=$(cat "$SUCCESS_FILE" | wc -l)

FAILURE_COUNT=$(cat "$FAILURE_FILE" | wc -l)

echo "统计信息:"

echo "总表数: $TOTAL_TABLES"

echo "成功迁移表数: $SUCCESS_COUNT"

echo "失败迁移表数: $FAILURE_COUNT"

echo "剩余表数: $((TOTAL_TABLES - SUCCESS_COUNT))"

如果是迁移快照,则

SOURCE_PATH=hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/.snapshot/snapshot_full_1/

测试全量迁移

[root@cdh02 ~]# bash test.sh full

将从第一个表开始迁移

执行全量迁移模式

准备迁移的表数量: 6

-----------------------------------

2025-05-12 11:43:18 - 开始迁移表: dest_table

执行命令: hadoop distcp -m 80 -bandwidth 100 -pugp -strategy dynamic -skipcrccheck -delete -update hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/dest_table hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db_1/dest_table

2025-05-12 11:43:36 - 迁移成功: dest_table

-----------------------------------

2025-05-12 11:43:36 - 开始迁移表: insert_test

执行命令: hadoop distcp -m 80 -bandwidth 100 -pugp -strategy dynamic -skipcrccheck -delete -update hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/insert_test hdfs://192.168.3.144:8020/user/hive/wareh测试增量迁移(当中途迁移失败,从当前表开始)

[root@cdh02 ~]# bash test.sh incremental user_data_fenqu

将从表 user_data_fenqu 开始迁移

执行增量迁移模式

准备迁移的表数量: 3

-----------------------------------

2025-05-12 11:45:53 - 开始迁移表: user_data_fenqu

执行命令: hadoop distcp -m 80 -bandwidth 100 -pugp -strategy dynamic -skipcrccheck -delete -update hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/user_data_fenqu hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db_1/user_data_fenqu

2025-05-12 11:46:44 - 迁移成功: user_data_fenqu

-----------------------------------

2025-05-12 11:46:44 - 开始迁移表: user_tbl_ext

执行命令: hadoop distcp -m 80 -bandwidth 100 -pugp -strategy dynamic -skipcrccheck -delete -update hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/user_tbl_ext hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db_1/user_tbl_ext

2025-05-12 11:47:01 - 迁移成功: user_tbl_ext

-----------------------------------

2025-05-12 11:47:01 - 开始迁移表: user_tbl_internal

执行命令: hadoop distcp -m 80 -bandwidth 100 -pugp -strategy dynamic -skipcrccheck -delete -update hdfs://192.168.2.149:8020/user/hive/warehouse/user_info_db.db/user_tbl_internal hdfs://192.168.3.144:8020/user/hive/warehouse/user_info_db.db_1/user_tbl_internal

2025-05-12 11:47:30 - 迁移成功: user_tbl_internal

-----------------------------------

迁移任务完成!

成功迁移的表记录在: migration_success.txt

失败迁移的表记录在: migration_failure.txt

统计信息:

总表数: 6

成功迁移表数: 6

失败迁移表数: 0

剩余表数: 0注意:当迁移的源目录下不存在文件,则会报文件不存在,失败。